Hashtag scraper

A better way of scraping

Common wordlists and mask attacks can crack a large number of passwords, but to recover the last few we have to get creative. Passwords are slowly turning into passphrases: several words packed together, as pictured some time ago in the famous XKCD comic.

This means that we have to find a way to guess what people are actually thinking and how they usually combine words.

Twitter to the rescue

Luckily we can scrape Twitter and extract information from people's tweets using the Twitter API. With its 140-character limit and its heavy use of hashtags, Twitter is a wonderful example of how the average person loves to pack words together.

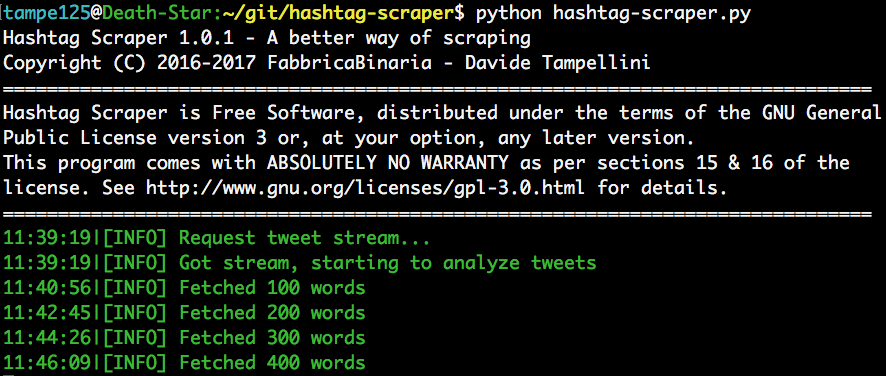

With Hashtag Scraper you can connect directly to Twitter's main entry point and get flooded by tweets in real time (there's a reason it's called the firehose).

How to use it

Its usage is pretty straightforward. First of all, install the required libraries by running:

pip install -r requirements.txt

Then create a Twitter app, copy the file settings-dist.json to settings.json and fill it in with the access details you just got for your app (API key, API secret, Access Token and Access Token Secret).

Finally, you're ready to go! You can launch the scraper with the following command:

python hashtag-scraper.py [OPTIONS]

-l LANG, --lang LANG    Filter tweets by language (default: 'en')

-e LENGTH, --length LENGTH    Minimum length of a hashtag (default: 10)
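The two documented options map naturally onto Python's standard `argparse` module. The snippet below is a minimal sketch of how such a command line could be declared, not the script's actual code; the function name `parse_args` is hypothetical.

```python
import argparse


def parse_args(argv=None):
    """Hypothetical parser mirroring the two documented options."""
    parser = argparse.ArgumentParser(
        description="Scrape hashtags from the Twitter stream into a wordlist"
    )
    # Language filter: only tweets tagged with this language are kept.
    parser.add_argument("-l", "--lang", default="en",
                        help="Filter tweets by language (default: 'en')")
    # Hashtags shorter than this are discarded.
    parser.add_argument("-e", "--length", type=int, default=10,
                        help="Minimum length of a hashtag (default: 10)")
    # argv=None makes argparse read sys.argv; pass a list to test it.
    return parser.parse_args(argv)
```

For example, `parse_args(["-l", "it", "-e", "12"])` would select Italian tweets and keep only hashtags of 12 or more characters.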

Results are dumped into a file named wordlist_hashtag.txt.
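The filtering step can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the tool's implementation: each tweet is assumed to be a dict with `lang` and `text` keys (Twitter's tweet objects do carry a `lang` field, but the real script may read hashtags from the structured `entities` data rather than from the text), hashtags are lowercased, and the helper names `extract_hashtags` and `dump_wordlist` are hypothetical.

```python
import re
from typing import Iterable, Set

# '#' followed by word characters: a simplification of Twitter's own
# hashtag rules, which handle more Unicode and punctuation edge cases.
HASHTAG_RE = re.compile(r"#(\w+)")


def extract_hashtags(tweets: Iterable[dict], lang: str = "en",
                     min_length: int = 10) -> Set[str]:
    """Collect lowercase hashtags of at least min_length chars from tweets in `lang`."""
    words = set()
    for tweet in tweets:
        if tweet.get("lang") != lang:
            continue  # skip tweets written in other languages
        for tag in HASHTAG_RE.findall(tweet.get("text", "")):
            tag = tag.lower()  # assumption: merge case variants into one candidate
            if len(tag) >= min_length:
                words.add(tag)
    return words


def dump_wordlist(words: Iterable[str], path: str = "wordlist_hashtag.txt") -> None:
    """Write one candidate per line, sorted; this sketch overwrites the file."""
    with open(path, "w", encoding="utf-8") as fh:
        for word in sorted(words):
            fh.write(word + "\n")
```

Lowercasing is a design choice of the sketch: it folds `#FoodForThought` and `#foodforthought` into a single candidate, and case variants can still be generated at cracking time with mangling rules.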

A quick cracking session was able to recover the following passwords (please note the length of the strings):

washingtonredskins

nostringsattached

popgoestheweasel

thelittleprince

ranchocucamonga

youarenotalone

eastsidemarios

rainraingoaway

genieinabottle

foodforthought
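To make the point about length concrete, here is a short computation over the recovered passwords. It assumes, for illustration only, an exhaustive mask restricted to the 26 lowercase letters; real attacks use larger character sets, which only makes the keyspace bigger.

```python
# Passwords recovered in the cracking session above.
recovered = [
    "washingtonredskins", "nostringsattached", "popgoestheweasel",
    "thelittleprince", "ranchocucamonga", "youarenotalone",
    "eastsidemarios", "rainraingoaway", "genieinabottle", "foodforthought",
]

lengths = [len(p) for p in recovered]
shortest, longest = min(lengths), max(lengths)

# Candidates in a lowercase-only mask of the shortest length (assumed charset).
keyspace = 26 ** shortest

print(shortest, longest)  # 14 18
print(f"{keyspace:.2e}")
```

Even the shortest of them is 14 characters long, where a lowercase-only mask already spans 26^14 (about 6.45 × 10^19) candidates.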

We could achieve the same result by combining dictionary words, but this method is faster: we know for sure that each of these combinations has been used at least once.
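The trade-off can be illustrated with a small word-segmentation sketch: it shows that a hashtag such as `rainraingoaway` is just four dictionary words chained together, and how quickly a blind combination of words grows. The toy dictionary, the 10,000-word dictionary size and the helper names `segment` and `combination_space` are assumptions for illustration, not part of Hashtag Scraper.

```python
from functools import lru_cache
from typing import Optional, Set, Tuple


def segment(word: str, dictionary: Set[str]) -> Optional[Tuple[str, ...]]:
    """Split `word` into dictionary words using as few pieces as possible; None if impossible."""

    @lru_cache(maxsize=None)
    def best(i: int) -> Optional[Tuple[str, ...]]:
        # Shortest tuple of dictionary words covering word[i:], or None.
        if i == len(word):
            return ()
        result = None
        for j in range(i + 1, len(word) + 1):
            piece = word[i:j]
            if piece in dictionary:
                rest = best(j)
                if rest is not None and (result is None or len(rest) + 1 < len(result)):
                    result = (piece,) + rest
        return result

    return best(0)


def combination_space(n_words: int, k: int) -> int:
    """Candidates produced by blindly chaining k words from an n-word dictionary."""
    return n_words ** k


TOY_DICTIONARY = {"rain", "go", "away", "food", "for", "thought"}
print(segment("rainraingoaway", TOY_DICTIONARY))  # ('rain', 'rain', 'go', 'away')
print(combination_space(10_000, 4))  # 10**16 candidates
```

With a hypothetical 10,000-word dictionary, four-word chains alone amount to 10^16 candidates, while the scraped wordlist only contains combinations someone actually typed.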

Comments and suggestions are more than welcome!
